Automated End-to-End Encrypted Curator Backups for Massively-Scaled Elasticsearch, Part 1: Puppet, Curator and AWS S3

December 28, 2018

elasticsearch curator aws puppet rsyslog elasticsearch watcher

Introduction

This is part 1 of a 2-part series on automating end-to-end encrypted Elasticsearch Curator backups. This first part covers automating the encrypted backups themselves using Puppet and AWS S3. Part 2 covers monitoring and alerting on backup success with Rsyslog, Kibana and X-Pack Watcher.

Motivation

The main motivation for part 1 of this series is that, as of 2018, I was unable to find any internet resource that lays out a conceptual approach to automating Curator backups. There are numerous blog posts that could be combined into a Curator backup solution, but none is comprehensive, and the Puppet Forge Curator modules are old. In the interest of transparency and courtesy, however, I'd recommend that anyone implementing automated Curator backups consider porting the logic laid out below to a tool like Ansible or Terraform, because these tools are built for modern SRE and infrastructure engineering paradigms such as immutable infrastructure and security automation.

Requirements

Puppet 3 (other versions should work with minimal tweaking)

CentOS 7 (also tested on CentOS 6, which needs very minimal tweaking)

Elasticsearch 5 or 6 (tested on both)

Elasticsearch Puppet module versions 5 & 6

Access to AWS S3

Caveats

An HTTPS Elasticsearch cluster is not required for E2E encrypted Curator backups, but the method used here for Curator-to-cluster and intracluster authorization is to assign the Puppet master's root cert, and each Puppet agent's client cert and private key, to any and all Elasticsearch and Curator configuration values named (something like) cert, client_cert and private_key, respectively. Shout out to Alan Evans for originally implementing this approach: it works like a charm, and I will be writing a brief post on it in the near future.

Ideally, S3 bucket creation and administration would be part of the Puppet automation process, but it is not, because the Puppet Forge AWS module doesn't handle many services and its support for the ones it does handle is limited. For example, its S3 resource can assign a bucket policy but not a bucket lifecycle configuration, which is important for this project.

All of the code is on my GitHub.

Curator Backups: End-to-End Encryption

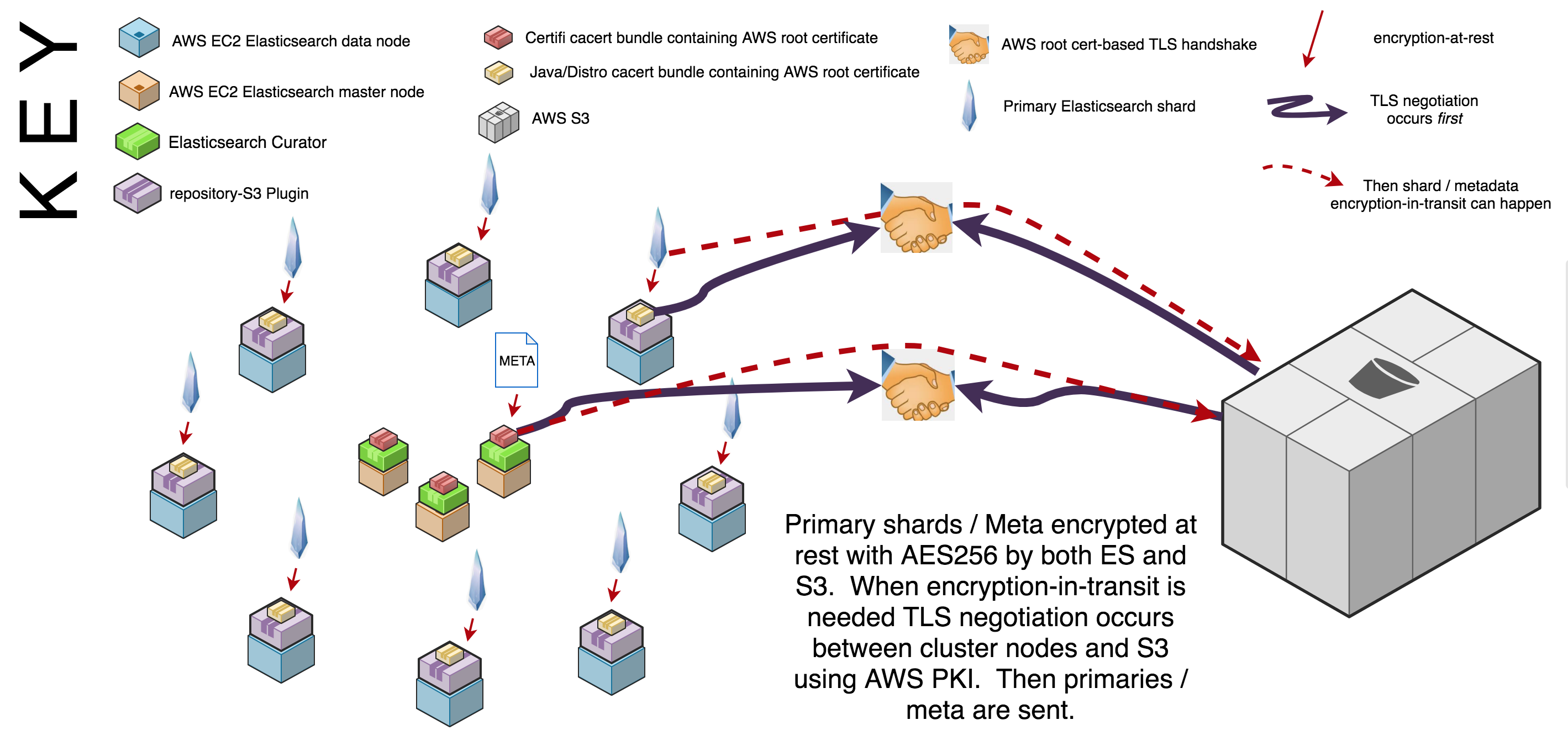

End-to-end encryption refers to any system that implements storage encryption-at-rest and network encryption-in-transit everywhere. Curator achieves encryption-at-rest via Elastic's repository-s3 plugin, whose server_side_encryption setting asks S3 to encrypt every snapshot file it writes, including the snapshot metadata files. S3 has multiple encryption-at-rest options; this post uses an Amazon S3 (IAM) bucket policy because it enforces encryption on everything that enters the bucket by rejecting any upload that does not request server-side encryption, whereas the other options only encrypt individual S3 objects. Both the repository-s3 plugin and the S3 bucket policy use AES256 as the encryption standard.
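To show what such a bucket policy contains, the Python sketch below builds one as a plain dict. The first two statements follow AWS's published "deny unencrypted object uploads" example: they reject PutObject requests that omit the AES256 server-side-encryption header or set it to anything else. The third statement, which denies any request not made over TLS via the aws:SecureTransport condition key, is an added assumption about how to require encryption-in-transit; the function name and bucket name are hypothetical.

```python
import json


def encryption_bucket_policy(bucket: str) -> dict:
    """Build an S3 bucket policy that rejects unencrypted uploads and non-TLS requests.

    The first two statements follow AWS's published "deny unencrypted object
    uploads" example; the third (TLS-only) statement is an added assumption.
    """
    objects = f"arn:aws:s3:::{bucket}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny uploads whose SSE header is set to anything other than AES256.
                "Sid": "DenyIncorrectEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": objects,
                "Condition": {"StringNotEquals": {"s3:x-amz-server-side-encryption": "AES256"}},
            },
            {
                # Deny uploads that omit the SSE header entirely.
                "Sid": "DenyUnEncryptedObjectUploads",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": objects,
                "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
            },
            {
                # Deny any request to the bucket or its objects that is not made over TLS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [f"arn:aws:s3:::{bucket}", objects],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON, ready to paste into the bucket policy editor.
    print(json.dumps(encryption_bucket_policy("dev-s3-bucket"), indent=2))
```

The output can be pasted into the bucket's policy editor or applied with `aws s3api put-bucket-policy --bucket <bucket> --policy file://policy.json`.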

Encryption-in-transit between Curator, Elasticsearch and S3 is handled with TLS, version 1.2 as of 2018. Amazon is its own certificate authority, and many applications include its root certificates in their certificate authority (CA) bundles. Curator itself never sends snapshot data to S3: it negotiates TLS with the Elasticsearch REST API using the CA cert configured for it or, failing that, the Python certifi module's CA bundle. The Elasticsearch nodes then write the snapshot data to S3 through the repository-s3 plugin, which negotiates TLS with S3 using the Java runtime's CA bundle. The diagram at the start of this section illustrates the end-to-end encryption approach.
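Curator is a Python application, so Python's standard ssl module is a convenient way to illustrate what "verified TLS 1.2 against a CA bundle" means on the client side. The helper below is hypothetical and is not Curator's code: it builds a context that requires a valid server certificate and a matching hostname, and refuses anything older than TLS 1.2.

```python
import ssl
from typing import Optional


def make_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that verifies the server against a CA bundle.

    cafile: path to a PEM CA bundle (for example the Puppet master's root cert);
    when None, the platform's default trust store is loaded instead.
    """
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context(cafile=cafile)
    # Refuse protocol versions older than TLS 1.2 (ssl.TLSVersion needs Python 3.7+).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

A context like this can be passed to `urllib.request.urlopen(url, context=...)`; pointing cafile at the CA that signed your cluster's certs is what makes the connection both encrypted and authenticated.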

Curator Backups: Automation

The two most important parts of the encrypted backup process (as opposed to the backup monitoring/alerting process) are controlling which nodes receive Curator and automating repo and snapshot creation for your environments. Curator is only installed on the masters. Curator installation can be controlled with Puppet code, e.g. `if $facts['fqdn'] =~ /^host\d+\.(dev|qa|prod)\.domain\.com$/ { include ::curator }`, with the Puppet console, or with Hiera; this project uses the Puppet console. Automating repo and snapshot creation for your environments is handled with Puppet code. Controlling which nodes receive Curator is one of the most important features of this project because Curator runs as root and has the power to delete indices if configured to do so. While this article is about backing up Elasticsearch rather than deleting it, it is still prudent to be defensive against anything that has the power to blow clusters away. Automating repo metadata naming conventions per environment automates communication with AWS S3, which is the most crucial communication process this backup solution requires. The diagram below illustrates the approach just described and is followed by a more specific, step-by-step breakdown of the most important programmatic parts of the backup approach.
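The hostname-based gate can be sketched in Python to show the deny-by-default idea: only FQDNs that fully match an explicit pattern may receive Curator. The host naming scheme is the hypothetical host.(dev|qa|prod).domain.com one; note that the dots are escaped, since an unescaped "." in a regex matches any character.

```python
import re

# Hypothetical naming scheme: host<N>.<env>.domain.com.
# Dots are escaped because an unescaped "." matches any character.
CURATOR_HOSTS = re.compile(r"host\d+\.(dev|qa|prod)\.domain\.com")


def curator_allowed(fqdn: str) -> bool:
    """Deny by default: only FQDNs that fully match the pattern may receive Curator."""
    return CURATOR_HOSTS.fullmatch(fqdn.lower()) is not None
```

Using fullmatch rather than a search means a name such as host1.dev.domain.com.example.net is rejected instead of slipping through on a partial match.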

Automating Encrypted Curator Backups: Installing Elasticsearch’s repository-s3 Plugin

In order to use Curator to send snapshots to, and retrieve them from, S3, Elasticsearch requires its repository-s3 plugin. The plugin must be installed on every node, however, and each node must be restarted afterwards, so installing it into an already running cluster means a cluster restart. Ideally, this plugin would be baked into an Elasticsearch image, thereby avoiding the restart, but if, as in my case, you're installing it after cluster initialization, you'll want to plan for a cluster restart.

Check a node's installed plugins like so (the command only reports on the node it runs on):

```bash
sudo /usr/share/elasticsearch/bin/elasticsearch-plugin list --verbose | grep s3
```

If the plugin is not installed, the following block of Puppet code will deploy it for you:

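```puppet
exec { 'repository-s3':
  command => '/usr/share/elasticsearch/bin/elasticsearch-plugin install --batch repository-s3',
  # Skip the install when the plugin's descriptor file already exists.
  unless  => "/bin/test -f ${::elasticsearch::params::plugindir}/repository-s3/plugin-descriptor.properties",
  # Resource references are capitalized; point this at whatever must run first.
  require => Class['some_class_that_should_likely_come_before'],
}
```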

The --batch flag makes the install non-interactive: repository-s3 requests additional Java security permissions, and --batch grants them without the confirmation prompt that would otherwise stall an unattended Puppet run. The unless attribute is a hack to check whether the plugin is already installed, because Puppet and Elasticsearch will complain if it is. Finally, the require attribute enforces ordering, since Elasticsearch must be installed before the plugin install is attempted.
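For readers less familiar with Puppet's exec, the Python sketch below mirrors the same idempotency logic: check for the plugin's descriptor file and run the install only when it is missing. The directory layout follows the unless check above; the function names are hypothetical, and the dry_run default keeps the sketch from touching a real system.

```python
import subprocess
from pathlib import Path
from typing import List

PLUGIN_BIN = "/usr/share/elasticsearch/bin/elasticsearch-plugin"


def plugin_installed(plugin_dir: str, name: str) -> bool:
    """Mirror the exec's `unless` guard: installed means the descriptor file exists."""
    return (Path(plugin_dir) / name / "plugin-descriptor.properties").is_file()


def install_command(name: str) -> List[str]:
    """--batch accepts the plugin's extra security permissions without prompting."""
    return [PLUGIN_BIN, "install", "--batch", name]


def ensure_plugin(plugin_dir: str, name: str, dry_run: bool = True) -> bool:
    """Install the plugin only if missing; return True if an install ran (or would run)."""
    if plugin_installed(plugin_dir, name):
        return False
    if not dry_run:
        subprocess.run(install_command(name), check=True)
    return True
```

Running ensure_plugin twice in a row on a real node would install once and then report False, which is the idempotent behavior the unless attribute gives the exec.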

Automating Encrypted Curator Backups: Installing the Curator RPM

Installing Curator on CentOS 7 with Puppet is a breeze. The code below is so trivial I almost didn't include it, but it does handle internally mirroring Elasticsearch artifacts in yum, a chaining-arrow dependency flow, and running Curator via cron:

```puppet
yumrepo { 'curator':
  enabled             => $manage_repo,
  baseurl             => $baseurl,
  gpgcheck            => 1,
  gpgkey              => $gpg_key_file_path,
  skip_if_unavailable => 0,
  require             => File[$gpg_key_file_path],
} ~>
package { 'elasticsearch-curator':
  ensure => present,
} ~>
file { '/opt/elasticsearch-curator/curator.yml':
  ensure  => file,
  mode    => '0640',
  content => template("${module_name}/curator.yml.erb"),
} ~>
file { '/opt/elasticsearch-curator/snapshot.yml':
  ensure  => file,
  mode    => '0640',
  content => template("${module_name}/snapshot.yml.erb"),
} ->
file { '/etc/cron.d/snapshot_alert':
  ensure  => present,
  mode    => '0644',
  # Run Curator; if it exits non-zero, run the alert script instead.
  content => "${snapshot_actions_cron_schedule} ${snapshot_actions_cron_user} /usr/bin/curator --config /opt/elasticsearch-curator/curator.yml /opt/elasticsearch-curator/snapshot.yml || /bin/bash /usr/local/bin/snapshot_alert.sh\n",
}
```

The yumrepo block configures the yum repo securely by enforcing GPG signature checking; since the elasticsearch-curator package is signed with Elastic's GPG key, using this feature is highly recommended. If you're not mirroring the Elasticsearch artifacts internally, this block isn't needed.

I'm not a big fan of ERB templating, but if you're not using Hiera then you'll definitely want to templatize your curator.yml and snapshot.yml configuration files and deploy them with the content => template(...) attribute.

The ~> symbols are chaining arrows, Puppet syntactic sugar for applying the resource on the left first and then the resource on the right; if the left resource changes, the right resource receives a refresh event (which only some resource types, such as service and exec, act on). The chaining arrows may be needed to ensure that the /opt/elasticsearch-curator/ directory, which is created by installing the elasticsearch-curator RPM, is present before any files are placed in it.

The -> arrow at the end of the dependency chain means apply the resource on the left and then the resource on the right, without sending a refresh event. This ensures that the cron job is only created once Curator is installed, and reflects the assumption that a change to snapshot.yml doesn't necessitate a change to the cron job.
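The difference between -> and ~> can be illustrated with a simplified Python model of a linear chain: every resource is applied in order, and a resource reached through ~> additionally receives a refresh event when the resource on its left changed. This is a toy model, not Puppet's graph engine; it ignores failures (which make Puppet skip dependent resources) and the fact that many types, files included, ignore refresh events.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Resource:
    name: str
    changed: bool  # whether applying this resource changes the system


def apply_chain(resources: List[Resource], arrows: List[str]) -> List[str]:
    """Simplified model of a chain like `A ~> B -> C`.

    arrows[i] links resources[i] to resources[i + 1]: '->' only orders them,
    '~>' also delivers a refresh to the right side if the left side changed.
    """
    if len(arrows) != len(resources) - 1 or not set(arrows) <= {"->", "~>"}:
        raise ValueError("need one '->' or '~>' between each pair of resources")
    log = []
    for i, res in enumerate(resources):
        log.append(f"apply {res.name}")
        # The refresh is processed after the receiving resource is applied.
        if i > 0 and arrows[i - 1] == "~>" and resources[i - 1].changed:
            log.append(f"refresh {res.name}")
    return log
```

For example, with a changed yumrepo, an unchanged package and an unchanged cron file linked by ~> and ->, only the package is refreshed.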

The cron job's command relies on the shell's || short-circuit evaluation: cron attempts to run Curator and executes snapshot_alert.sh only if Curator exits with a non-zero status. The snapshot_alert.sh script is covered in part 2 of this series, which deals with alerting.
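The cron line's || behaves like the Python sketch below: run the primary command, and run the fallback only when the primary exits non-zero. The function name is hypothetical; as in a shell, the returned status is that of the last command that ran, and a missing executable is reported as 127.

```python
import subprocess
from typing import Sequence


def run_with_fallback(primary: Sequence[str], fallback: Sequence[str]) -> int:
    """Python equivalent of the shell's `primary || fallback`.

    `fallback` runs only when `primary` exits non-zero; the return value is the
    exit status of the last command that ran, as in a shell.
    """
    try:
        status = subprocess.run(list(primary)).returncode
    except FileNotFoundError:
        status = 127  # the status a shell reports for "command not found"
    if status == 0:
        return 0
    return subprocess.run(list(fallback)).returncode
```

Applied to this post's cron job, the call would be `run_with_fallback(["/usr/bin/curator", "--config", "/opt/elasticsearch-curator/curator.yml", "/opt/elasticsearch-curator/snapshot.yml"], ["/bin/bash", "/usr/local/bin/snapshot_alert.sh"])`.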

Automating Encrypted Curator Backups: Creating an Encrypted Snapshot Repository

The code block below uses the Elasticsearch _snapshot API to create a repository in an Elasticsearch cluster to store encrypted cluster snapshots that will be securely transmitted to, and possibly retrieved from, AWS S3. It's a curl-based PUT that uses HTTP Basic Auth to authenticate an Elasticsearch user to the cluster; note that the value after Basic must be the base64 encoding of user:password, not the bare password. The required Elasticsearch API values are sent via curl's -d switch, but we must also send a header declaring that this data's Content-Type is application/json. The Elasticsearch API requires strict content-type declaration for reasons outlined in this post. The values assigned to the command's JSON keys are all variables, with the exception of "type": "s3". The possible values for these variables can be reviewed here. The most important values are ${_snapshot_repository} and ${_bucket_name}, because they automate the creation of repos according to host environment, which is critical to overall automation. Programmatically handling the assignment of these two values is discussed in the next two subsections. The ${snapshot_bucket_server_side_encrypt} value is variablized, but really must be set to true to actualize end-to-end encryption. ${snapshot_bucket_canned_acl} assigns a canned ACL to the buckets and objects the repository creates; there are several choices, and the best and most secure is private. Finally, the unless attribute is used because Puppet exec resources are not idempotent on their own, as discussed in numerous places, including this post here.

```puppet
exec { "create_${_snapshot_repository}_repo":
  path    => ['/bin', '/usr/bin'],
  # Skip the PUT if _cat/repositories already lists a repo with exactly this name.
  unless  => "curl -s -k -H 'Authorization: Basic ${::basic_auth_password}' https://$(hostname -f):9200/_cat/repositories | grep -q '^${_snapshot_repository} '",
  command => "curl -k -XPUT -H 'Authorization: Basic ${::basic_auth_password}' https://$(hostname -f):9200/_snapshot/${_snapshot_repository} -H 'Content-Type: application/json' -d '{ \"type\": \"s3\", \"settings\": { \"bucket\": \"${_bucket_name}\", \"compress\": \"${snapshot_bucket_compress}\", \"server_side_encryption\": \"${snapshot_bucket_server_side_encrypt}\", \"canned_acl\": \"${snapshot_bucket_canned_acl}\" } }'",
}
```
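To make the request's structure explicit, the Python sketch below builds the same PUT _snapshot/<repo> request with the standard library, without sending it. It surfaces two details the curl one-liner hides: the Basic auth token is the base64 encoding of user:password, and the body is ordinary JSON. Host, user, password and bucket values are placeholders, and the sketch sends JSON booleans rather than the quoted strings the Puppet command produces.

```python
import base64
import json
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """HTTP Basic auth sends base64("user:password"), not the bare password."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def repo_request(host: str, repo: str, bucket: str, user: str, password: str,
                 compress: bool = True, sse: bool = True,
                 canned_acl: str = "private") -> urllib.request.Request:
    """Build (but do not send) the PUT _snapshot/<repo> request the exec issues."""
    body = {
        "type": "s3",
        "settings": {
            "bucket": bucket,
            "compress": compress,
            "server_side_encryption": sse,  # must be true for end-to-end encryption
            "canned_acl": canned_acl,
        },
    }
    return urllib.request.Request(
        url=f"https://{host}:9200/_snapshot/{repo}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": basic_auth_header(user, password)},
        method="PUT",
    )
```

To send it, pass the request to `urllib.request.urlopen` together with an `ssl.SSLContext` that trusts your cluster's CA, rather than disabling verification the way `curl -k` does.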

Automating Encrypted Curator Backups: Controlling for Elasticsearch Repository Name

This block automates assigning the $_snapshot_repository value required by the repo-creation API call. For a single cluster, an if/else containing a case statement will do: the if clause covers overriding the value at the Puppet console or Hiera level, and the case statement in the else clause assigns a $_snapshot_repository value based on the domain of the host on which the Curator manifest is being executed.

```puppet
if $snapshot_repository {
  $_snapshot_repository = $snapshot_repository
} else {
  case $facts['domain'] {
    'dev.domain.com':  { $_snapshot_repository = 'dev-snapshot-repo' }
    'qa.domain.com':   { $_snapshot_repository = 'qa-snapshot-repo' }
    'prod.domain.com': { $_snapshot_repository = 'prod-snapshot-repo' }
    default:           { fail("domain must match \"^some message that covers all required domain cases$\"") }
  }
}
```
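The same override-then-lookup logic, sketched in Python: an explicit value wins, known domains map to a repository name, and anything else fails loudly, much as Puppet's fail() halts catalog compilation. The mapping and function name are illustrative only.

```python
from typing import Optional

# Illustrative domain-to-repository map mirroring the single-cluster case statement.
REPO_BY_DOMAIN = {
    "dev.domain.com": "dev-snapshot-repo",
    "qa.domain.com": "qa-snapshot-repo",
    "prod.domain.com": "prod-snapshot-repo",
}


def resolve_repository(override: Optional[str], domain: str) -> str:
    """A non-empty override wins; otherwise map the domain or raise on unknown domains."""
    if override:
        return override
    try:
        return REPO_BY_DOMAIN[domain]
    except KeyError:
        raise ValueError(f"domain {domain!r} is not one of {sorted(REPO_BY_DOMAIN)}") from None
```

Failing on unknown domains, instead of falling back to a default name, is what keeps a misclassified node from registering a repository under the wrong environment.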

If you have multiple cluster use cases, a more complex, regex-based case statement helps. The block below is a bit hard to read, which is why declarative languages shouldn't be used for scripting. Nonetheless, it has been tested against environments with thousands of AWS EC2 instances and works just fine:

```puppet
if $snapshot_repository {
  $_snapshot_repository = $snapshot_repository
} else {
  # Match on the FQDN: Facter's hostname fact holds only the short name (e.g. host1).
  case $facts['fqdn'] {
    /^host\d+\.cluster\.type\.1\.(dev|qa|prod)\.domain\.com$/: {
      $_snapshot_repository = sprintf('%s-cluster-type-1-snapshot-repository', regsubst($facts['fqdn'], '^.*\.(dev|qa|prod)\.domain\.com$', '\1'))
    }
    /^host\d+\.cluster\.type\.2\.(dev|qa|prod)\.domain\.com$/: {
      $_snapshot_repository = sprintf('%s-cluster-type-2-snapshot-repository', regsubst($facts['fqdn'], '^.*\.(dev|qa|prod)\.domain\.com$', '\1'))
    }
    /^host\d+\.cluster\.type\.3\.(dev|qa|prod)\.domain\.com$/: {
      $_snapshot_repository = sprintf('%s-cluster-type-3-snapshot-repository', regsubst($facts['fqdn'], '^.*\.(dev|qa|prod)\.domain\.com$', '\1'))
    }
    default: { fail("hostname must match \"^some message that covers all required hostname cases$\"") }
  }
}
```

The number of repo names to create is the number of cluster types multiplied by the number of host environments: the block above has 3 cluster types (cluster.type.1, cluster.type.2 and cluster.type.3) and 3 host environments (dev|qa|prod), so 9 repo names are required, i.e. dev-cluster-type-1-snapshot-repository, ..., prod-cluster-type-3-snapshot-repository. Puppet's built-in regsubst function returns the host's environment substring (dev, qa, etc.) to sprintf, which interpolates it into the format string '%s-cluster-type-N-snapshot-repository', thereby assigning an environment to the cluster type. Because the regsubst pattern is anchored to the whole FQDN, the entire name is replaced by '\1', a backreference to the (dev|qa|prod) capture group: for host1.cluster.type.1.dev.domain.com regsubst returns dev, and for host1.cluster.type.1.qa.domain.com it returns qa. The default case fails if no hostname matches; keep in mind that this prevents Puppet catalog compilation, meaning none of the Puppet code is executed at all, which provides added defense against creating a repo on a node that has somehow incorrectly entered the node group and had Curator installed.
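The naming scheme can be checked outside Puppet with a short Python sketch. Instead of regsubst plus sprintf, it reads the cluster type and environment straight from the regex capture groups, and it enumerates every possible name to confirm the 3 × 3 = 9 count. The host pattern is the hypothetical one used above.

```python
import re
from itertools import product
from typing import List

# Hypothetical naming scheme: host<N>.cluster.type.<T>.<env>.domain.com
HOST_RE = re.compile(r"host\d+\.cluster\.type\.(\d+)\.(dev|qa|prod)\.domain\.com")
CLUSTER_TYPES = ("1", "2", "3")
ENVIRONMENTS = ("dev", "qa", "prod")


def repository_for(fqdn: str) -> str:
    """Derive '<env>-cluster-type-<T>-snapshot-repository' from a host FQDN, or fail."""
    m = HOST_RE.fullmatch(fqdn)
    if m is None or m.group(1) not in CLUSTER_TYPES:
        raise ValueError(f"hostname {fqdn!r} does not match any known cluster")
    cluster_type, env = m.groups()
    return "%s-cluster-type-%s-snapshot-repository" % (env, cluster_type)


def all_repositories() -> List[str]:
    """Every name the scheme can produce: one per (cluster type, environment) pair."""
    return ["%s-cluster-type-%s-snapshot-repository" % (env, t)
            for t, env in product(CLUSTER_TYPES, ENVIRONMENTS)]
```

Puppet's case statement should likewise expose regex captures as $1 and $2 inside the matching branch, which would be an alternative to the regsubst call.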

Automating Encrypted Curator Backups: Controlling for AWS S3 Bucket Name

The blocks below are identical to the ‘Controlling for Elasticsearch Repository Name’ blocks above, except in this case the S3 bucket name is being assigned:

```puppet
# Single cluster use case
if $bucket_name {
  $_bucket_name = $bucket_name
} else {
  case $facts['domain'] {
    'dev.domain.com':  { $_bucket_name = 'dev-s3-bucket' }
    'qa.domain.com':   { $_bucket_name = 'qa-s3-bucket' }
    'prod.domain.com': { $_bucket_name = 'prod-s3-bucket' }
    default:           { fail("domain must match \"^some message that covers all required domain cases$\"") }
  }
}
```

```puppet
# Multiple cluster use cases
if $bucket_name {
  $_bucket_name = $bucket_name
} else {
  # Match on the FQDN: Facter's hostname fact holds only the short name.
  case $facts['fqdn'] {
    /^host\d+\.cluster\.type\.1\.(dev|qa|prod)\.domain\.com$/: {
      $_bucket_name = sprintf('%s-cluster-type-1-s3-bucket', regsubst($facts['fqdn'], '^.*\.(dev|qa|prod)\.domain\.com$', '\1'))
    }
    /^host\d+\.cluster\.type\.2\.(dev|qa|prod)\.domain\.com$/: {
      $_bucket_name = sprintf('%s-cluster-type-2-s3-bucket', regsubst($facts['fqdn'], '^.*\.(dev|qa|prod)\.domain\.com$', '\1'))
    }
    /^host\d+\.cluster\.type\.3\.(dev|qa|prod)\.domain\.com$/: {
      $_bucket_name = sprintf('%s-cluster-type-3-s3-bucket', regsubst($facts['fqdn'], '^.*\.(dev|qa|prod)\.domain\.com$', '\1'))
    }
    default: { fail("hostname must match \"^some message that covers all required hostname cases$\"") }
  }
}
```
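Because these bucket names are generated rather than typed, it can be worth validating them before they reach S3. The Python sketch below checks the main S3 bucket naming rules: 3 to 63 characters of lowercase letters, digits, dots and hyphens, starting and ending with a letter or digit, with no adjacent dots and not formatted as an IPv4 address. It is not an exhaustive implementation of AWS's rules, and the function name is hypothetical.

```python
import ipaddress
import re

# 3-63 chars: lowercase letters, digits, dots, hyphens; letter or digit at both ends.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def valid_bucket_name(name: str) -> bool:
    """Check a generated bucket name against the main S3 naming rules (not exhaustive)."""
    if not _BUCKET_RE.fullmatch(name) or ".." in name:
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted like IP addresses are rejected
    except ValueError:
        return True
    return False
```

A check like this could run in CI over every name the naming scheme can produce, so a typo in a case branch is caught before Puppet ever registers a repository.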

Automating Encrypted Curator Backups: Configuring Server-Side Encryption and Snapshot Cleanup in S3

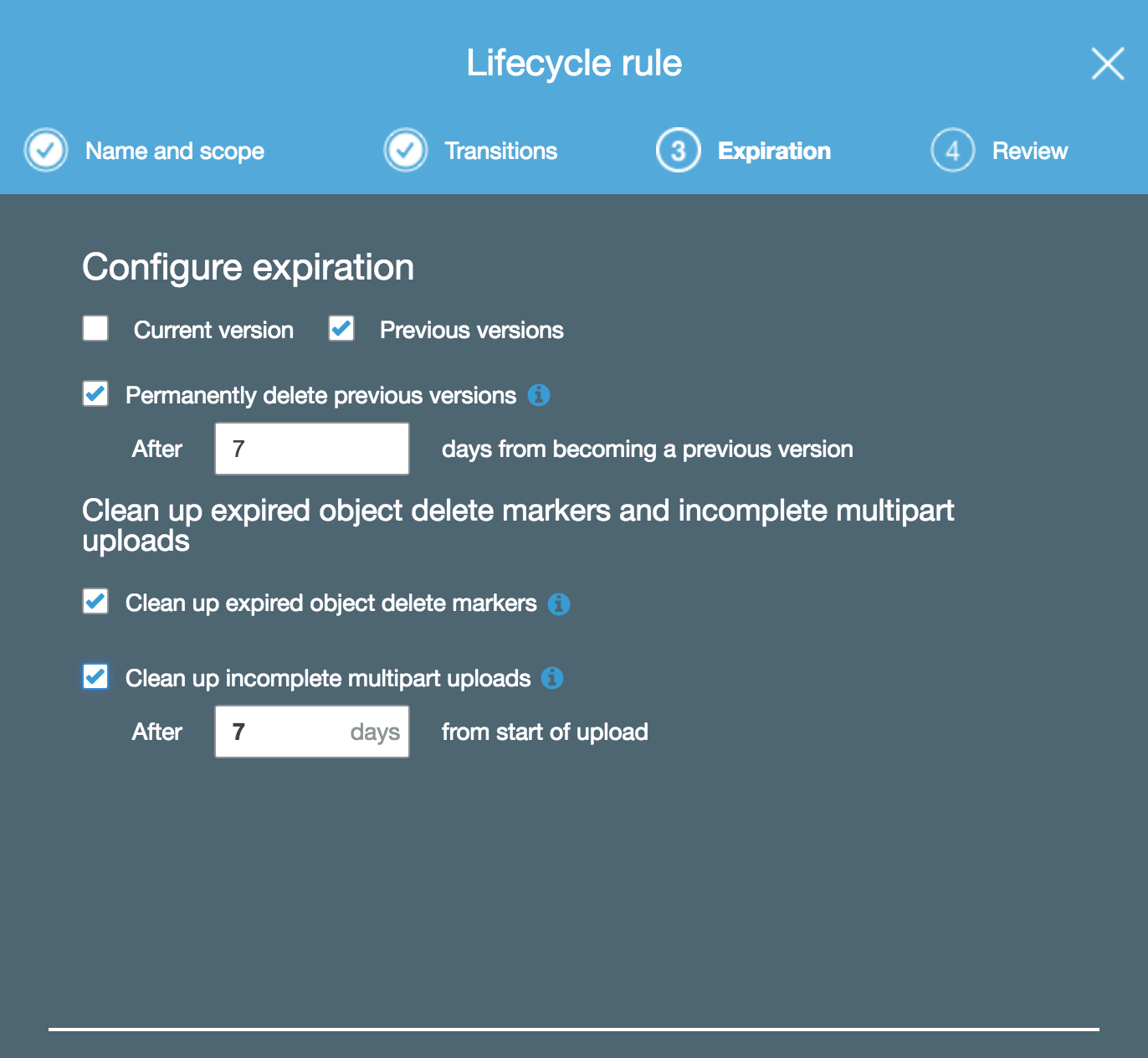

Configuring complete server-side encryption (SSE) for everything in an S3 bucket is so simple that I'm not going to explain it: just navigate to the Amazon S3 (IAM) bucket policy link posted above and change YourBucket to the name of the S3 bucket that will receive your cluster snapshots. Configuring snapshot cleanup is also trivial in S3 and is done using object lifecycle management. The image at the start of this section shows a lifecycle rule that permanently deletes every snapshot object version except the current one once it is older than a week. It also cleans up incomplete multipart uploads.
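If you prefer code to the console, the lifecycle rule can be expressed as the JSON document S3's lifecycle API accepts. The Python sketch below approximates the rule described above: noncurrent object versions expire 7 days after becoming noncurrent, and incomplete multipart uploads are aborted. The 7-day abort window and the rule ID are assumptions, and NoncurrentVersionExpiration only has an effect on buckets with versioning enabled.

```python
import json


def snapshot_lifecycle(noncurrent_days: int = 7, abort_multipart_days: int = 7) -> dict:
    """Lifecycle configuration in the shape S3's PutBucketLifecycleConfiguration expects."""
    return {
        "Rules": [
            {
                "ID": "expire-noncurrent-snapshot-versions",
                "Filter": {"Prefix": ""},  # apply the rule to every object in the bucket
                "Status": "Enabled",
                # Permanently delete versions once they have been noncurrent this long.
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
                # Abort multipart uploads that were started but never completed.
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": abort_multipart_days},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(snapshot_lifecycle(), indent=2))
```

Save the output as lifecycle.json and apply it with `aws s3api put-bucket-lifecycle-configuration --bucket <bucket> --lifecycle-configuration file://lifecycle.json`.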

Puppet is now deploying and configuring Curator and the repository-s3 plugin; the plugin is requesting AES256 server-side encryption for every snapshot file it writes and is sending snapshots over TLS, verified against the AWS root certs, to an S3 bucket whose bucket policy requires encryption-in-transit and encryption-at-rest: end-to-end encrypted Curator backups have been achieved!

Bonus Section: Two Pro-Tips for Having Confidence in Your Curator Backups

Backing up Elasticsearch with Curator doesn't mean anything if you cannot actually restore your clusters. A full restore of a massive production cluster will be time-consuming and potentially expensive, but do yourself a favor: prove that you can deploy new cluster server infrastructure, including load balancers, PKI, etc., and then use Curator or Elasticsearch to do a full restore in at least Dev and QA. During a massive cluster outage, there could be nothing worse than having to explain why the backup solution isn't working.

Finally, when automating Curator, make sure that whatever programmatic logic you use puts the right Curator jobs on the right machines. For example, if some machines also run Curator delete_indices actions, make sure that those jobs don't end up on clusters that should never have indices deleted.

Conclusion: Monitoring and Alerting are Needed

Now we need to become aware of snapshot failures and alert when they happen; part 2 of this series presents how to do so using a patched version of rsyslog, some bash hacking, Kibana and X-Pack Watcher. If you're following along with this or are in the middle of something similar, feel free to email or tweet me; I'd be happy to lend an ear or share any knowledge I have that may be useful.