Automated AWS KMS Encryption for PostgreSQL via Ansible Pull Request 31960 (and bug report 53407)

February 28, 2019

aws kms ansible github databases postgres

Background

Although the scope and efficacy of community Ansible modules can vary, the Ansible open-source community is second to none. Ansible has extremely active IRC channels, most notably (for this project's purposes) #ansible and #aws-ansible. Ansible's GitHub is also very responsive and friendly. In fact, thanks to these two communities I was able to achieve a playbook history first!!! Okay… maybe it wasn't quite "historical", but with the help of the Ansible community I was able to use pull request 31960 to create a KMS key and assign it to a PostgreSQL RDS instance. The Ansible open-source community also helped me file bug report 53407. (Here's a flow diagram I spent too long creating that really isn't necessary now after proper editing, but I'm too attached to it to rm it.)

Introduction

If comprehensive information security is priority 0, then database security specifically is priority 1. Thanks to orchestration and configuration management tools like Ansible, building and securing database-backed systems has never been easier. What follows are two simple but comprehensive Ansible roles and a corresponding playbook that can be used to securely create an AWS KMS key and then use that key to encrypt an RDS PostgreSQL 10.6 database. As of February 2019, Ansible's stock aws_kms module can't actually create an AWS KMS key, although the feature request and pull requests to add this have been evolving since October 2017. Given the relatively slow pace of purely open-source development, I thought a write-up might be helpful in case anyone else insists on automated encryption.

A Nice Little Bash Function Before We Get Started

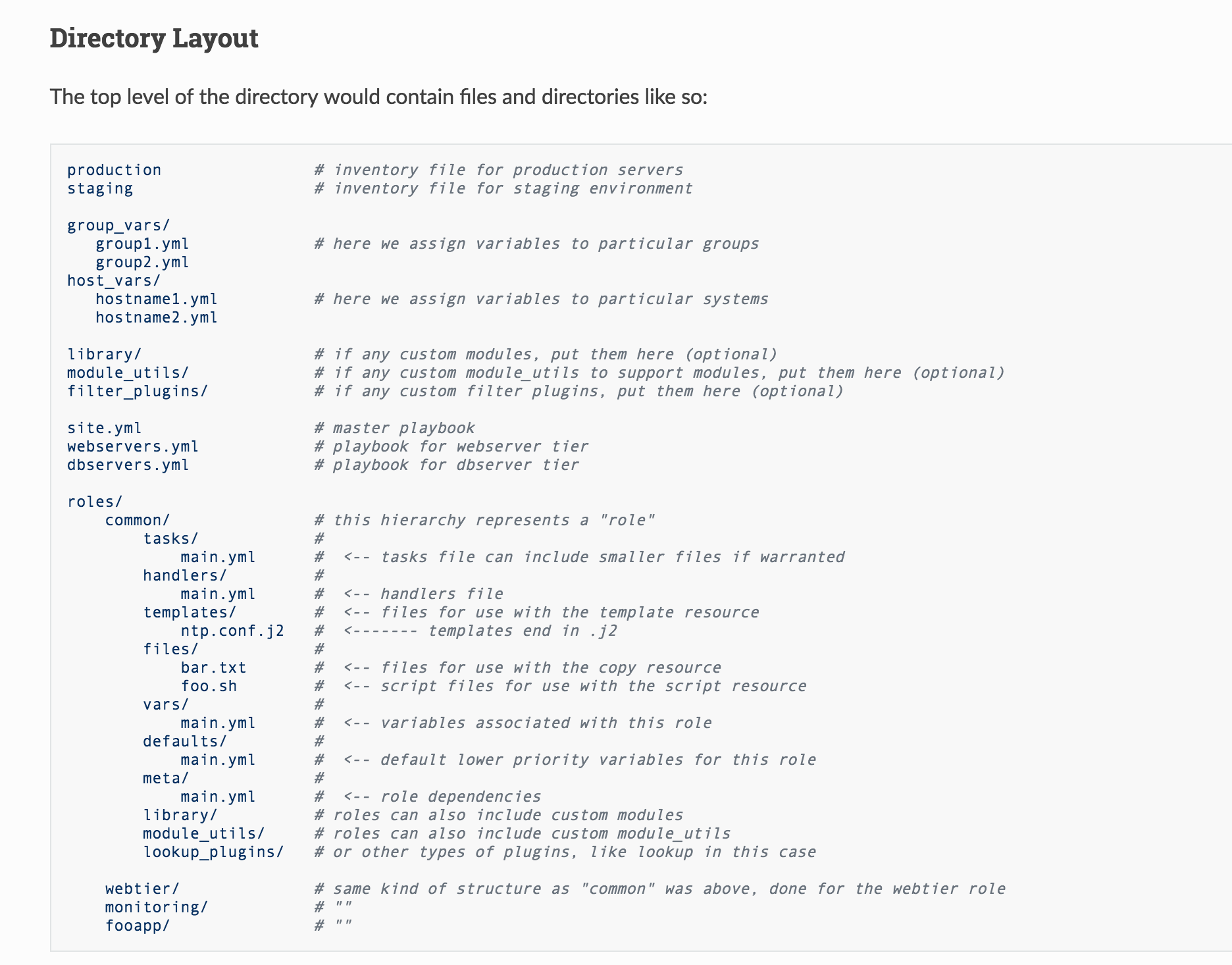

Ansible best practices recommend the following directory structure:

It’s extensive, which is good, but the only part I follow exactly is the roles structure. The following Bash function may be helpful:

~/.bashrc

ar() {
  local dir="$1"
  /bin/mkdir -p "roles/$dir"/{tasks,handlers,templates,files,vars,defaults,meta,library,module_utils,lookup_plugins}
  /usr/bin/touch "roles/$dir"/{tasks,handlers,vars,defaults,meta}/main.yml
}

ar is short for "ansible role": this little dudette takes a single positional argument, such as create_rds, and creates the role's directory and file structure per the best practices mentioned above.
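As a usage sketch, here is how both roles used later in this post (create_aws_kms_key and manage_aws_rds_postgresql) could be scaffolded in one go. scaffold_tutorial is a hypothetical helper name, the playbooks/ directory is assumed from the ansible-playbook command near the end, and ar is repeated (with the role name quoted) so the snippet runs on its own in Bash:

```shell
# Same layout as the ar function above, repeated so this sketch is standalone.
ar() {
  local dir="$1"
  mkdir -p "roles/$dir"/{tasks,handlers,templates,files,vars,defaults,meta,library,module_utils,lookup_plugins}
  touch "roles/$dir"/{tasks,handlers,vars,defaults,meta}/main.yml
}

# Hypothetical helper: scaffold the two roles from this post, plus a
# playbooks/ directory, under the current working directory.
scaffold_tutorial() {
  local role
  mkdir -p playbooks
  for role in create_aws_kms_key manage_aws_rds_postgresql; do
    ar "$role"
  done
}
```

Run it from the root of your Ansible repo; afterwards files such as roles/create_aws_kms_key/tasks/main.yml and roles/manage_aws_rds_postgresql/defaults/main.yml exist and are ready to fill in.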

Patching aws_kms.py using Ansible Pull Request 31960

Our goal with Ansible is to create an AWS KMS key using Ansible module aws_kms, but before we can do so we need to first find the aws_kms.py file on our Ansible box:

jonas@jonas-mac ~/development/personal/git/ansible ∫ sudo find / -name 'aws_kms.py'
Password:
/usr/local/Cellar/ansible/2.7.6/libexec/lib/python3.7/site-packages/ansible/modules/cloud/amazon/aws_kms.py
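Scanning the whole disk with sudo find works, but it is slow. A quicker sketch reads the "ansible python module location" line that ansible --version prints and appends the module's relative path. This assumes an Ansible 2.x layout like the 2.7.6 install above (modules under ansible/modules/cloud/amazon/); both function names are hypothetical:

```shell
# Read `ansible --version` output on stdin and print where aws_kms.py should
# live, assuming the Ansible 2.x layout shown above (modules/cloud/amazon/).
aws_kms_path_from_version() {
  awk -F' = ' '/ansible python module location/ { print $2 "/modules/cloud/amazon/aws_kms.py"; exit }'
}

# Print the aws_kms.py path for the ansible found on PATH, or fail if the
# file is not there (e.g. a newer Ansible that ships AWS modules in collections).
find_aws_kms() {
  local kms_file
  kms_file=$(ansible --version | aws_kms_path_from_version)
  [ -f "$kms_file" ] && printf '%s\n' "$kms_file"
}
```

Because it asks the ansible executable itself, this also finds the copy inside Homebrew's private libexec environment, which a plain python3 on your PATH would not see. The result can be fed straight to the patch command, e.g. patch -i aws_kms.patch "$(find_aws_kms)".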

Now that we have the file location we need to apply the following patch from Pull Request 31960:

diff --git a/library/aws_kms.py b/library/aws_kms.py
index 89d347fd..05acf1bd 100755
--- a/library/aws_kms.py
+++ b/library/aws_kms.py
@@ -851,6 +851,7 @@ def main():
         tags=dict(type='dict', default={}),
         purge_tags=dict(type='bool', default=False),
         grants=dict(type='list', default=[]),
+        policy=dict(),
         purge_grants=dict(type='bool', default=False),
         state=dict(default='present', choices=['present', 'absent']),
     )

Copy the patch above into a file (aws_kms.patch below) and then use the following patch command to patch aws_kms.py; the -i switch tells patch which patch file to read. Feel free to add the --dry-run switch first if you have never used the patch command before. Note: the command below passes the aws_kms.py target explicitly; if you don't pass it, make sure you edit the file paths in the patch header above to match your aws_kms.py location.

jonas@jonas-mac ~/development/personal/git/ansible ∫ patch -i aws_kms.patch $(sudo find / -name aws_kms.py)
patching file /usr/local/Cellar/ansible/2.7.6/libexec/lib/python3.7/site-packages/ansible/modules/cloud/amazon/aws_kms.py
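If you'd rather not patch blindly, a small sketch (apply_kms_patch is a hypothetical name) runs patch --dry-run first, applies the change only if that succeeds, and finishes with a grep that should show the policy=dict() line added by the patch above:

```shell
# Apply a patch file to one explicit target, but only after a dry run
# succeeds; -N makes patch skip, rather than prompt about, a patch that
# looks already applied. Finally grep for the option PR 31960 adds.
apply_kms_patch() {
  local patch_file="$1" target="$2"
  if ! patch --dry-run -N -i "$patch_file" "$target" >/dev/null; then
    echo "patch does not apply cleanly to $target; nothing changed" >&2
    return 1
  fi
  patch -N -i "$patch_file" "$target" >/dev/null &&
    grep -n 'policy=dict()' "$target"
}
```

For example, apply_kms_patch aws_kms.patch /usr/local/Cellar/ansible/2.7.6/libexec/lib/python3.7/site-packages/ansible/modules/cloud/amazon/aws_kms.py prints the line number of the new policy option when everything went through.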

Creating an AWS KMS key using Ansible Pull Request 31960

With our aws_kms.py file patched, we can now create our AWS KMS key. This tut assumes a directory structure that essentially follows Ansible best practices, as created by the Bash function I provided above.

Copy and paste the following code into your roles/your_aws_kms_key_creation_role/tasks/main.yml file:

---
# standard template
# for kms key creation
- name: create_aws_kms_key_aws_secrets_file
  include_vars:
    file: vault.yml

- name: create_aws_kms_key
  aws_kms:
    aws_access_key: "{{ create_aws_kms_key_aws_access_key }}"
    aws_secret_key: "{{ create_aws_kms_key_aws_secret_key }}"
    description: "{{ create_aws_kms_key_description }}"
    enabled: "{{ create_aws_kms_key_enabled }}"
    grants:
      - name: "{{ create_aws_kms_key_grants_dict['create_aws_kms_key_grants_dict_name'] }}"
        grantee_principal: "{{ create_aws_kms_key_grants_dict['create_aws_kms_key_grants_dict_grantee_principal'] }}"
        operations: "{{ create_aws_kms_key_grants_dict['create_aws_kms_key_grants_dict_operations_list'] }}"
    key_alias: "{{ create_aws_kms_key_key_alias }}"
    mode: "{{ create_aws_kms_key_mode }}"
    role_name: "{{ create_aws_kms_key_role_name }}"
    state: "{{ create_aws_kms_key_state }}"
  register: out

- debug: var=out.key_id
...

Then create a vault.yml file in your roles/your_aws_kms_key_creation_role/vars/ directory, paste the following code into it, and replace the dummy values with your AWS secrets:

---
create_aws_kms_key_aws_access_key: YOUR_AWS_ACCESS_KEY
create_aws_kms_key_aws_secret_key: YOUR_AWS_SECRET_KEY
...

Now we need to encrypt this vault.yml file with ansible-vault:

jonas@jonas-mac ~/development/personal/git/ansible ∫ ansible-vault encrypt roles/create_aws_kms_key/vars/vault.yml
New Vault password:
Confirm New Vault password:
Encryption successful

If you've adjusted the file name and directory path values to match your own role, you have just encrypted your AWS secrets, a crucial practice that is often overlooked.
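Because a plaintext vault.yml is easy to commit by accident, here is a sketch of a guard (is_vaulted is a hypothetical helper) that checks each file starts with the $ANSIBLE_VAULT; header that ansible-vault writes as the first line of an encrypted file:

```shell
# Succeed only if every file given starts with the "$ANSIBLE_VAULT;" header
# that ansible-vault writes as the first line of an encrypted file.
is_vaulted() {
  local f
  for f in "$@"; do
    case "$(head -n 1 "$f" 2>/dev/null)" in
      '$ANSIBLE_VAULT;'*) ;;
      *) echo "NOT encrypted: $f" >&2; return 1 ;;
    esac
  done
}
```

For example, is_vaulted roles/*/vars/vault.yml could run from a Git pre-commit hook so an unencrypted secrets file never leaves your machine; a missing file is reported as not encrypted too.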

At this point we have our task file and our secrets file. Now all we need is a default variables file from which our task file can read all of its needed parameter values. After creating that I’ll do a brief breakdown of the core code components.

Copy and paste the following code block into roles/your_aws_kms_key_creation_role/defaults/main.yml

---
create_aws_kms_key_description: >
  keys created for rds postgres
  instances
create_aws_kms_key_enabled: true
create_aws_kms_key_key_alias: &create_aws_kms_key_key_alias your_aws_kms_key_name
create_aws_kms_key_mode: grant
create_aws_kms_key_role_name: &create_aws_kms_key_role_name arn:aws:iam::aws:policy/AWSKeyManagementServicePowerUser
create_aws_kms_key_state: present
create_aws_kms_key_grants_dict:
  create_aws_kms_key_grants_dict_name: *create_aws_kms_key_role_name
  create_aws_kms_key_grants_dict_grantee_principal: rds.us-east-1.amazonaws.com
  create_aws_kms_key_grants_dict_operations_list: [DescribeKey, CreateGrant, GenerateDataKey, RetireGrant, Encrypt, ReEncryptTo, Decrypt, GenerateDataKeyWithoutPlaintext, Verify, ReEncryptFrom, Sign]

Even though it’s likely obvious because this tut assumes a fair amount of prior knowledge, let me do a quick breakdown of the code thus far:

Using the include_vars Directive To Read Our vault.yml File

---
# standard template
# for kms key creation
- name: create_aws_kms_key_aws_secrets_file
  include_vars:
    file: vault.yml

We created a secrets file in vault.yml and encrypted it with a password via ansible-vault, but our task file still needs to be able to see these secrets (once they have been decrypted at runtime, which is handled later). There are several ways to achieve this. For example, we could have created roles/your_aws_kms_key_creation_role/defaults/main/{main.yml,vault.yml} and forgone the include_vars directive, because Ansible will autoload every YAML file in a main directory, assuming the rest of the directory structure meets Ansible's autoloading requirements (which can themselves be configured). I chose include_vars instead because it loads variables dynamically from within a task file only, and since this playbook has so few tasks, it's a nice surgical option that lets the task file see our secrets.

Assigning grants, state: present and registering Return Values For Later Access

- name: create_aws_kms_key
  aws_kms:
    aws_access_key: "{{ create_aws_kms_key_aws_access_key }}"
    aws_secret_key: "{{ create_aws_kms_key_aws_secret_key }}"
    description: "{{ create_aws_kms_key_description }}"
    enabled: "{{ create_aws_kms_key_enabled }}"
    grants:
      - name: "{{ create_aws_kms_key_grants_dict['create_aws_kms_key_grants_dict_name'] }}"
        grantee_principal: "{{ create_aws_kms_key_grants_dict['create_aws_kms_key_grants_dict_grantee_principal'] }}"
        operations: "{{ create_aws_kms_key_grants_dict['create_aws_kms_key_grants_dict_operations_list'] }}"
    key_alias: "{{ create_aws_kms_key_key_alias }}"
    mode: "{{ create_aws_kms_key_mode }}"
    role_name: "{{ create_aws_kms_key_role_name }}"
    state: "{{ create_aws_kms_key_state }}"
  register: out

- debug: var=out.key_id

AWS KMS uses grants to control key permissions. The aws_kms.py module will assign default grant values to your keys if you let it, and our values for create_aws_kms_key_grants_dict_operations_list are effectively admin permissions anyway, but I still almost always prefer to specify every security-related parameter explicitly for granular control and easier permission tuning.
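To see which grants actually landed on the key, here is a sketch using the AWS CLI. It assumes the CLI is configured for the same account and region as the playbook, and show_grants is a hypothetical name; it prints each grant's name, grantee and operations so you can compare them with create_aws_kms_key_grants_dict:

```shell
# Print every grant on a KMS key (key ID or key ARN, e.g. the out.key_id
# value from the debug task) with its name, grantee principal and operations.
show_grants() {
  local key_id="$1"
  aws kms list-grants --key-id "$key_id" \
    --query 'Grants[].{Name: Name, Grantee: GranteePrincipal, Operations: Operations}' \
    --output json
}
```

For example, show_grants followed by the key ID from the debug output should list a grant whose grantee is rds.us-east-1.amazonaws.com if the task did what the defaults ask for.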

Pull request 31960 allows the aws_kms module to create keys when the state parameter is set to present, hence our state: "{{ create_aws_kms_key_state }}" key-value pair. This is what our patching was for!

register: out uses the register directive to save all of our aws_kms task's return values to a dictionary in the out variable. Later we'll read our newly created AWS KMS key's ID from this out dictionary and pass it to our RDS task to automate our PostgreSQL encryption.

The - debug: var=out.key_id task prints our key's ID. A similar debug task follows our PostgreSQL task below, so at runtime we can check whether the correct key ID is being assigned to our PostgreSQL instance.
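The same check can be made against KMS itself, outside of Ansible. A sketch, assuming the key was created with the alias from create_aws_kms_key_key_alias and that the AWS CLI is configured (key_id_for_alias is a hypothetical name), resolves the alias to its key ID so it can be compared with the debug output:

```shell
# Resolve a KMS alias (with or without the "alias/" prefix) to its key ID.
key_id_for_alias() {
  local name="${1#alias/}"
  aws kms describe-key --key-id "alias/$name" \
    --query 'KeyMetadata.KeyId' --output text
}
```

For example, key_id_for_alias your_aws_kms_key_name should print the same ID as the "out.key_id" line in the play output.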

Creating an AWS RDS PostgreSQL 10.6 db.t2.small Instance and Encrypting It with Our New AWS KMS Key ID

We have a role that creates a key. Now we need a role that uses that key to encrypt postgres.

Copy and paste the code block below into your roles/your_aws_rds_postgres_creation_role/tasks/main.yml file. The comments in the code block refer to the bug report and to the Pro-Tips section at the end.

---
# using rds_instance
# module to create
# postgresql instances bc
# the rds module does not
# support encryption.
# https://github.com/ansible/ansible/issues/24415
# license_model bug report explained
# in its own section below
- name: manage_aws_rds_postgresql_vault_secrets_file
  include_vars:
    file: vault.yml

- name: create_aws_rds_postgresql
  rds_instance:
    allocated_storage: "{{ manage_aws_rds_postgresql_allocated_storage }}"
    allow_major_version_upgrade: "{{ manage_aws_rds_postgresql_allow_major_version_upgrade }}"
    apply_immediately: "{{ manage_aws_rds_postgresql_apply_immediately }}"
    auto_minor_version_upgrade: "{{ manage_aws_rds_postgresql_auto_minor_version_upgrade }}"
    aws_access_key: "{{ manage_aws_rds_postgresql_aws_access_key }}"
    aws_secret_key: "{{ manage_aws_rds_postgresql_aws_secret_key }}"
    backup_retention_period: "{{ manage_aws_rds_postgresql_backup_retention_period }}"
    db_instance_class: "{{ manage_aws_rds_postgresql_db_instance_class }}"
    db_instance_identifier: "{{ manage_aws_rds_postgresql_db_instance_identifier }}"
    db_name: "{{ manage_aws_rds_postgresql_db_name }}"
    # not defined in defaults/main.yml; omitted unless you set it
    db_subnet_group_name: "{{ manage_aws_rds_postgresql_db_subnet_group_name | default(omit) }}"
    enable_performance_insights: "{{ manage_aws_rds_postgresql_enable_performance_insights }}"
    engine: "{{ manage_aws_rds_postgresql_engine }}"
    engine_version: "{{ manage_aws_rds_postgresql_engine_version }}"
    kms_key_id: "{{ manage_aws_rds_postgresql_kms_key_id }}"
    #license_model: "{{ manage_aws_rds_postgresql_license_model }}"
    master_user_password: "{{ manage_aws_rds_postgresql_master_user_password }}"
    master_username: "{{ manage_aws_rds_postgresql_master_username }}"
    # also not defined in defaults/main.yml; only needed when renaming an instance
    new_db_instance_identifier: "{{ manage_aws_rds_postgresql_new_db_instance_identifier | default(omit) }}"
    port: "{{ manage_aws_rds_postgresql_port }}"
    preferred_backup_window: "{{ manage_aws_rds_postgresql_preferred_backup_window }}"
    preferred_maintenance_window: "{{ manage_aws_rds_postgresql_preferred_maintenance_window }}"
    publicly_accessible: "{{ manage_aws_rds_postgresql_publicly_accessible }}"
    state: "{{ manage_aws_rds_postgresql_state }}"
    storage_encrypted: "{{ manage_aws_rds_postgresql_storage_encrypted }}"
    storage_type: "{{ manage_aws_rds_postgresql_storage_type }}"
    tags:
      db_name: "{{ manage_aws_rds_postgresql_tags['db_name'] }}"
    validate_certs: "{{ manage_aws_rds_postgresql_validate_certs }}"
    vpc_security_group_ids: "{{ manage_aws_rds_postgresql_vpc_security_group_ids }}"

- debug: var=kms_key_id
...

Now copy and paste the code blocks below into your roles/your_aws_rds_postgres_creation_role/vars/vault.yml and roles/your_aws_rds_postgres_creation_role/defaults/main.yml files, respectively, and encrypt your vault.yml as we did above. (The file must be named vault.yml, because that is the name the include_vars task loads.)

---
manage_aws_rds_postgresql_aws_access_key: AWS_ACCESS_KEY
manage_aws_rds_postgresql_aws_secret_key: AWS_SECRET_KEY
manage_aws_rds_postgresql_master_user_password: DATABASE_MASTER_USER_PASSWORD
...

---
manage_aws_rds_postgresql_allocated_storage: 20
manage_aws_rds_postgresql_allow_major_version_upgrade: False
manage_aws_rds_postgresql_apply_immediately: False
manage_aws_rds_postgresql_auto_minor_version_upgrade: False
manage_aws_rds_postgresql_availability_zone:
manage_aws_rds_postgresql_aws_access_key:
manage_aws_rds_postgresql_aws_secret_key:
manage_aws_rds_postgresql_backup_retention_period: 0
manage_aws_rds_postgresql_db_instance_class: db.t2.small
manage_aws_rds_postgresql_db_instance_identifier: ansible-rds-postgres-10-6-non-HA-encrypted-test-instance
manage_aws_rds_postgresql_db_name: ansible_rds_postgres_10_6_non_HA_encrypted_test_instance
manage_aws_rds_postgresql_enable_performance_insights: False
manage_aws_rds_postgresql_engine: postgres
manage_aws_rds_postgresql_engine_version: 10.6
manage_aws_rds_postgresql_kms_key_id: "{{ out.key_id }}"
#manage_aws_rds_postgresql_license_model: postgresql-license
manage_aws_rds_postgresql_master_username: jonas
manage_aws_rds_postgresql_port: 5432
manage_aws_rds_postgresql_preferred_backup_window: '00:00-00:30'
manage_aws_rds_postgresql_preferred_maintenance_window: 'sun:00:30-sun:01:00'
manage_aws_rds_postgresql_publicly_accessible: True
manage_aws_rds_postgresql_state: present
manage_aws_rds_postgresql_storage_encrypted: True
manage_aws_rds_postgresql_storage_type: gp2
manage_aws_rds_postgresql_tags:
  db_name: ansible_rds_postgres_10_6_non_HA_encrypted_test_instance
manage_aws_rds_postgresql_validate_certs: True
manage_aws_rds_postgresql_vpc_security_group_ids: your_vpc_security_group_ids_list
...

By default the rds_instance module launches RDS instances into the default VPC, which is almost certainly not what you want, because the default VPC often does not conform to enterprise security standards unless it has been altered to do so. If you do not want to launch into the default VPC, you must supply a parameter that refers to VPC-related resources in your RDS role. Examples of such parameters are db_subnet_group_name (string) and vpc_security_group_ids (list). I have specified a dummy value above: manage_aws_rds_postgresql_vpc_security_group_ids: your_vpc_security_group_ids_list
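To find real values for those placeholders, here is a sketch with the AWS CLI that lists the security groups and DB subnet groups belonging to one VPC. It assumes a configured CLI; vpc_db_placement is a hypothetical name and the VPC ID you pass is your own:

```shell
# List candidate values for vpc_security_group_ids and db_subnet_group_name
# in one VPC: security group IDs/names, then DB subnet group names.
vpc_db_placement() {
  local vpc_id="$1"
  echo "# security groups in $vpc_id"
  aws ec2 describe-security-groups \
    --filters "Name=vpc-id,Values=$vpc_id" \
    --query 'SecurityGroups[].[GroupId,GroupName]' --output text
  echo "# DB subnet groups in $vpc_id"
  aws rds describe-db-subnet-groups \
    --query "DBSubnetGroups[?VpcId=='$vpc_id'].DBSubnetGroupName" --output text
}
```

The subnet groups are filtered client-side with a JMESPath query on VpcId, while the security groups use the EC2 vpc-id server-side filter.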

Two simple lines from our roles/your_aws_rds_postgres_creation_role/ represent the culmination of this entire project:

# ../defaults/main.yml
manage_aws_rds_postgresql_kms_key_id: "{{ out.key_id }}"

# ../tasks/main.yml
kms_key_id: "{{ manage_aws_rds_postgresql_kms_key_id }}"

First we grab the key_id from the out variable we registered in our key creation role (which was only possible via PR 31960), and then we assign that ID to the kms_key_id parameter in our rds_instance task. With this logic in place we're ready to create a playbook.
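Once the instance exists, a sketch like the following can confirm the result. It assumes a configured AWS CLI; check_rds_encryption and arn_matches_key_id are hypothetical names. describe-db-instances reports StorageEncrypted and returns KmsKeyId as a full key ARN, so the comparison with out.key_id is made on the ARN's trailing key ID:

```shell
# True if a KMS key ARN (arn:aws:kms:REGION:ACCOUNT:key/KEY_ID) ends in KEY_ID.
arn_matches_key_id() {
  [ -n "$2" ] && [ "${1##*/}" = "$2" ]
}

# Ask RDS whether an instance is encrypted and with which key, then compare
# that key with the ID registered in `out` by the key creation role.
check_rds_encryption() {
  local db_id="$1" key_id="$2" encrypted kms_arn
  read -r encrypted kms_arn < <(
    aws rds describe-db-instances --db-instance-identifier "$db_id" \
      --query 'DBInstances[0].[StorageEncrypted,KmsKeyId]' --output text
  )
  if [ "$encrypted" = "True" ] && arn_matches_key_id "$kms_arn" "$key_id"; then
    echo "OK: $db_id is encrypted with key $key_id"
  else
    echo "MISMATCH: StorageEncrypted=$encrypted KmsKeyId=$kms_arn" >&2
    return 1
  fi
}
```

For example, check_rds_encryption ansible-rds-postgres-10-6-non-HA-encrypted-test-instance followed by the key ID from the debug output. The process substitution requires Bash.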

A Playbook to Execute Both Roles

Our playbook merely needs to list our two roles:

---
- hosts: local
  roles:
    - create_aws_kms_key
    - manage_aws_rds_postgresql
...
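hosts: local only matches if your inventory defines a group called local. The post doesn't show its inventory, although the run output below suggests a local group containing localhost. The following minimal sketch is therefore an assumption, not the author's file: it writes an INI inventory with localhost in a local group, using ansible_connection=local so nothing goes over SSH (write_local_inventory is a hypothetical name):

```shell
# Hypothetical minimal inventory: a "local" group holding only localhost,
# run with the local connection plugin instead of SSH.
write_local_inventory() {
  local inventory="${1:-inventory}"
  cat > "$inventory" <<'EOF'
[local]
localhost ansible_connection=local
EOF
}

# Then (requires Ansible; --syntax-check parses the playbook without running it):
#   write_local_inventory inventory
#   ansible-playbook -i inventory \
#     playbooks/create_aws_rds_postgresql_with_new_kms.yml --syntax-check
```

If you'd rather not pass -i every time, point the inventory setting in your ansible.cfg at the same file.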

Et voilà: we have a role to create a key, a role to create an encrypted RDS instance with that key, and a playbook to execute both roles. Now all that's left to do is run ansible-playbook with the --ask-vault-pass switch, which decrypts our secrets, and run our playbook:

jonas@jonas-mac ~/development/personal/git/ansible ∫ ansible-playbook playbooks/create_aws_rds_postgresql_with_new_kms.yml --ask-vault-pass
Vault password:

PLAY [local] **********************************************************************************************************

TASK [Gathering Facts] ************************************************************************************************
ok: [localhost]

TASK [create_aws_kms_key : create_aws_kms_key_aws_secrets_file] *******************************************************
ok: [localhost]

TASK [create_aws_kms_key : create_aws_kms_key] ************************************************************************
changed: [localhost]

TASK [create_aws_kms_key : debug] *************************************************************************************
ok: [localhost] => {
    "out.key_id": "#############-8e685a1059c9"
}

TASK [manage_aws_rds_postgresql : manage_aws_rds_postgresql_vault_secrets_file] ***************************************
ok: [localhost]

TASK [manage_aws_rds_postgresql : create_aws_rds_postgresql] **********************************************************

TASK [manage_aws_rds_postgresql : debug] ******************************************************************************
ok: [localhost] => {
    "kms_key_id": "#############-8e685a1059c9"
}

RDS instances take between 5 and 10 minutes to fully boot. If the roles and playbook execute successfully your play will end something like:

TASK [manage_aws_rds_postgresql : manage_aws_rds_postgresql_vault_secrets_file] ***************************************
ok: [localhost]

TASK [manage_aws_rds_postgresql : create_aws_rds_postgresql] **********************************************************
changed: [localhost]

PLAY RECAP ************************************************************************************************************
localhost : ok=6 changed=2 unreachable=0 failed=0
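Rather than repeatedly checking the console while the instance boots, you can use the AWS CLI's waiter, which polls until the instance reports available. A sketch, assuming a configured AWS CLI and the identifier from manage_aws_rds_postgresql_db_instance_identifier (wait_for_rds is a hypothetical name):

```shell
# Block until RDS reports the instance as available, then print its status,
# endpoint address and port; fail if the CLI waiter gives up.
wait_for_rds() {
  local db_id="$1"
  if aws rds wait db-instance-available --db-instance-identifier "$db_id"; then
    aws rds describe-db-instances --db-instance-identifier "$db_id" \
      --query 'DBInstances[0].[DBInstanceStatus,Endpoint.Address,Endpoint.Port]' \
      --output text
  else
    echo "gave up waiting for $db_id" >&2
    return 1
  fi
}
```

For example, wait_for_rds ansible-rds-postgres-10-6-non-HA-encrypted-test-instance returns once the database is reachable at the printed endpoint.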

Pro-Tips

Don’t specify the license_model Parameter; At Least Not Yet: Bug Report 53407

There is only one allowed license value in AWS RDS PostgreSQL: “postgresql-license”. However, if that value is currently assigned to the Ansible rds_instance module's license_model parameter, Ansible will throw:

"msg": "value of license_model must be one of: license-included, bring-your-own-license, general-public-license, got: postgresql-license"

And if you then change the license_model value to one of the values listed in the stderr above, Ansible will throw:

"msg": "Unable to create DB instance: An error occurred (InvalidParameterCombination) when calling the CreateDBInstance operation: Invalid license model 'general-public-license' for engine 'postgres'. Valid license models are: postgresql-license"

As mentioned, Ansible has very active GitHub and Freenode communities, and I recently hopped on the latter to let one of the main rds_instance module maintainers know about this. He had me create bug report 53407, which was linked at the beginning of the tut if you want to follow along with it. Thankfully, the maintainer let me know that simply omitting this parameter assigns the only allowed value, postgresql-license, by default anyway. It's an admittedly elementary fix, but initially I was quite flabbergasted by the contradictory stderr messages I was receiving.
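To check for yourself which license models RDS accepts for a given engine version, here is a sketch that asks RDS for its orderable instance options and de-duplicates the LicenseModel field. It assumes a configured AWS CLI; valid_license_models is a hypothetical name. For postgres it should print only postgresql-license, matching the second error message above:

```shell
# Print the distinct license models RDS offers for one engine/version pair.
valid_license_models() {
  local engine="$1" version="$2"
  aws rds describe-orderable-db-instance-options \
    --engine "$engine" --engine-version "$version" \
    --query 'OrderableDBInstanceOptions[].LicenseModel' --output text |
    tr '\t' '\n' | sort -u
}
```

For example, valid_license_models postgres 10.6. Text output puts multiple values on one tab-separated line, so tr splits them before sort -u removes duplicates.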

No Shade, but Probably Only Use the rds_instance Module

The rds and rds_instance modules can both create RDS instances, but only the rds_instance module can create encrypted instances. Get the sauce here. Further complicating things, although both modules handle many of the same parameter options (available via boto3), each also handles some that the other doesn't. Thus far I have never used the rds module because I only spin up encrypted databases.

Conclusion

Ansible Pull Request 31960 and a little bit of effort make automated PostgreSQL encryption possible, which is great because database encryption should be mandatory. If questions or issues arise with anything in this project, or with your AWS or Ansible dev environments as they relate to completing it, feel free to reach out to me. I also highly recommend #ansible, #aws-ansible or ##aws on Freenode.